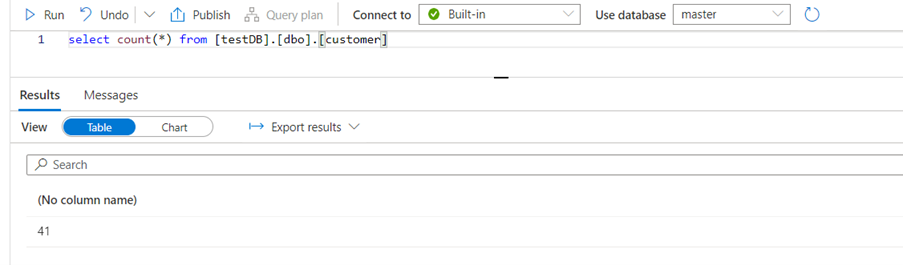

We have seen the implementation of the Lake Database in our previous blog, and we will see that the object created using the SPARK in the Lake Database can be easily queried using the Serverless SQL Pool.

This gives a benefit that the tables can be queried on the Ad-hoc basis without turning the SPARK pools up. So, it provides a way to explore the data either you are a Data Analyst, Data Scientist or you are working as a support professional in a Business Analytics team.

To see the working we will first create the SPARK Database, create a Delta table and then query the table from the Serverless.

Creating Spark DB:

%%pyspark spark.sql(“CREATE DATABASE IF NOT EXISTS testDB”)

Creating Delta table:

%%pyspark #creation of the delta table at the given location in the Delta Lake df = spark.read.load(‘abfss://test@stgteststorage.dfs.core.windows.net/landing/customer.parquet’, format=’parquet’) add= ‘abfss://test@stgteststorage.dfs.core.windows.net/tables/customer/’ df.write.option(“path”, add).format(“delta”).saveAsTable(testDB.customer)

But how is it possible to query the objects in the Lake Database from a different workspace? how it knows the structure of the table/objects?

This is actually possible due to the shared Metadata Model which is leveraged in synapse.

Whenever a SPARK External table or the Managed table is created backed by the parquet, CSV or delta format. it gets registered to the HIVE MetaStore under the hood and makes it appear like a traditional object in the database. Hive MetaStore uses the Derby as a backend database.

It takes seconds to make the table available to be queried using Serverless from the time it gets created.

When you register any table in the Lake DB by default the table name gets converted as lower case.

There is no limit for the numbers of Lake Database that can be created. However, on the other hand number limit for the creation of Serverless SQL pool is capped to 20.

The objects in the SPARK can be created with three ways. These way makes sure that the table is registered in the Hive MetaStore.

- The table can be immediately created by switching from .save() to .saveAsTable(). it creates the table with the same structure as the file or the DataFrame created on top of the file.

- You can use the SPARK SQL to create the Table to the location in Data Lake.

- you can use the SQL syntax after specifying the language in the magic command.

The view in the Lake Database cannot be queried using the Serverless SQL pool engine, it requires the SPARK engine.

Since we are seeing the SELECT property of the serverless to read the Lake Database objects but it is one way sync i.e. you cannot make the alteration in the table or view and expect to get sync with the SPARK or Hive MetaStore.

These changes can be done only by using the SPARK, Database Template or the Azure Link to Dataverse.