But it is lot more than that. So, what Lake Database actually is? In the Synapse workspace you can see the dropdown with a Lake database already present with the Name ‘default’.

Lake Database can be categorized as one of the below used cases.

- The Lake Database is the Database designed using the Database Template Designer. For example, the template includes the wide list of industries like Automotive, Health, Manufacturing etc. and a lot more pre-defined template.

- The Database linked with the Dataverse Link to Azure Synapse Analytics.

- It is the replicated Database which is associated with Spark that holds the Shared Metadata.

Now we have the area on which we can talk about the uses of Lake Database, we can start our discussion on each of these scenarios.

In above two mentioned scenarios the data is stored as a file on top of a Azure Data Lake Storage, Table in Dataverse but it gets registered as logical TABLE to synapse shared Metastore (/Shared Metadata Model) which can be treated as normal relational Database Object. Due to the Shared Metastore functionality it gives the ability to be queried as normal Database object instead of using the OPENROWSET.

It raises a question that if it doesn’t holds the data then what it actually contains. To answer this the Lake Database holds the Metadata information of the object like EXTERNAL TABLE DEFINITION, EXTERNAL TABLE LOCATION, SCHEMA INFORMATION, PARTITION INFORMATION when created. Due to this property it allows to query the tables as if they are the normal SQL objects.

Here another question may arise that if Lake Database can query file directly similar to the Serverless SQL POOL then how they both behave in nature.

if you consider as a top view they are quite similar in functionality. They both are being used to query the data stored in the Storage. But Lake Database has a additional feature due to its compatibility between the SPARK engine and the Serverless SQL engine.

However, the Serverless SQL pool can be seen as the traditional Azure SQL instance/dedicated SQL pool. It supports almost every object like EXTERNAL TABLES, EXTERNAL DATA SOURCES, VIEW, iTVF s, STORED PROCEDURES etc. but these functionality cannot be achieved using the Lake Database which is quite expected and is designed to work like this.

If we talk about the security provisioning to the objects then in Serverless we have Users, Roles that we can use to assign the access to the objects, also GRANT functionality which helps to restrict and provide the access to the granular level.

Since the Lake Database uses Azure Data Lake Storage to store the data, we can have the security to the File or Folder level. we can restrict the access by using RBAC and ACLs. We can discuss on the security aspects in our next blog.

But if we see the Lake Database as an overall with the features available then we can conclude that it is bit constrained. If we want to do alteration on the view or the table in the Lake Database using T-SQL then it is strictly not allowed. it needs to be managed by the above-mentioned templates that we defined like (Dataverse, SPARK or Database Templates).

However, the Lake Database is being exposed to the “write” operations and many of the object creation like stored procedure, iTVFs, views are now possible using the custom object creation.

What is Database Designer?

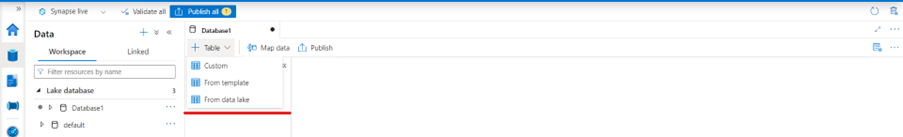

It can be opened by clicking on the ellipsis of the Lake DB on the option OPEN. The Database Designer gives you the nice window where you can design your models for your Lake Database from scratch by selecting the Custom option or from the Template or the last option is to directly go to your Lake choosing the option Custom and design the table right away. It actually gives you the rich list of features for each external table you create while you design your own model.

It allows you to label the tables and provides the field to give the description about the table and what is the purpose of it. So that in the future if anyone wants to check the model and wants to know the business logic of it then it will be right available.

The inability to model the relationship on the Data Lake has been removed by this integrated service within Azure Synapse.

Key Takeaways:

When to use:

- When you want to use the SPARK and Serverless SQL Pool interchangeably and wants the data to be queried using both SPARK and Serverless. you don’t need to duplicate the table in both the places. you can create views and perform complex queries by using only SPARK tables in the serverless and explore the data as per the business requirement.

- If you want to have your own logical Database with your custom model on the Data Lake or you can use the available templates in the Gallery.

- Explore the Data from Dataverse. it is not needed to export your Dataverse Data to Serverless. you can just connect and start browsing the tables you had in your Dataverse.

The Lake Database actually helps us to design a model on top of your Data Lake and allows us to model the relationship between them.

The Lake Database stores its data in the Data Lake in Azure Storage account. The data can be stored in the form of CSV, parquet or Delta. For each entity(table) a folder gets created underneath the database folder which you define while creating the Database as root folder.(we will talk in depth how the data gets stored in our next blogs)

The Lake Database leverages the Spark Engine and Serverless SQL pool to query the data. So, it actually decouples the storage and compute, and it uses the Shared metadata to query the tables from serverless SQL Pool.

Hi Diwakar, you have a great content. Please write on the Creation of Delta Lake as well.

Hi Kundan, you can follow along with the link https://blog.impulsewebsolution.com/delta-lake-solution-architecture/ and check the other blogs as well on Delta Lake.